Overview

Google Analytics has introduced a new format (GS2) for its stream cookie, and it changes how tracking data is structured. This guide explains the differences between the old GS1 format and the new GS2 format and demonstrates how to parse both versions.

Why Did Google Change the Cookie Format?

Google’s transition from the GS1 to GS2 cookie format wasn’t arbitrary. Here are several strategic reasons behind this change:

1. Improved Extensibility

The new format with single-letter prefixes allows Google to easily add new tracking parameters without breaking existing implementations. With GS1’s fixed positional format, adding new parameters would require updating all parsers to handle additional positions.
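
To make the difference concrete, here is a minimal sketch comparing the two parsing styles when a new field appears. The “x” prefix and the extra trailing “99” value are invented for illustration and are not real Google Analytics fields. The function names are also hypothetical.

```javascript
// Hypothetical illustration: the "x" prefix and the extra trailing "99" are
// invented for this example and are not real Google Analytics fields.

// GS1 style: meaning depends purely on position after the "GS1.<n>." header.
const POSITIONAL_FIELDS = ['sessionId', 'sessionNumber', 'sessionEngaged',
  'lastHitTimestamp', 'joinTimer', 'enhancedUserIdLoggedInState', 'enhancedUserId'];

function parsePositional(value) {
  const values = value.split('.').slice(2);
  const result = {};
  POSITIONAL_FIELDS.forEach((name, i) => {
    result[name] = values[i] ?? '';
  });
  return result; // values beyond the known positions are silently dropped
}

// GS2 style: every segment carries its own single-letter key.
function parsePrefixed(value) {
  const body = value.split('.').slice(2).join('.');
  const result = {};
  for (const segment of body.split('$')) {
    if (segment !== '') result[segment[0]] = segment.slice(1);
  }
  return result; // unrecognised prefixes are kept under their raw letter
}

const oldStyle = parsePositional('GS1.1.1746825440.14.0.1746825440.60.0.295082955.99');
const newStyle = parsePrefixed('GS2.1.s1746825440$o14$x99');
console.log(Object.values(oldStyle).includes('99')); // → false (the extra value is lost)
console.log(newStyle.x); // → 99 (picked up without changing the parser)
```

The positional parser would need a new entry in its field list (in exactly the right slot) before it could see the new value, while the prefixed parser needs no change at all.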

2. Self-Documenting Structure

The prefix system makes cookies more readable and debuggable. Instead of remembering that position 5 is “lastHitTimestamp”, developers can see t1746825440 and understand what t represents.

3. Version Control

The explicit GS2 prefix enables Google to:

- Track which format version is being used

- Potentially introduce GS3, GS4, etc. in the future

- Maintain backward compatibility during transitions
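
A parser can use the explicit header to decide how to read the rest of the value. The sketch below is hypothetical: the function names are illustrative, and “GS3” is only an example of a possible future version, not a known format. It recognises GS1 and GS2 and reports anything else rather than mis-parsing it.

```javascript
// Hypothetical version dispatch on the "GS<n>." header.
// Returns the numeric version, or null if the value is not a stream cookie.
function detectStreamCookieVersion(value) {
  const match = /^GS(\d+)\./.exec(value);
  return match ? Number(match[1]) : null;
}

function describeStreamCookie(value) {
  const version = detectStreamCookieVersion(value);
  switch (version) {
    case 1:
      return 'positional (dot-separated) format';
    case 2:
      return 'prefixed ($-separated) format';
    case null:
      return 'not a stream cookie';
    default:
      // A future version is flagged instead of being read with the wrong rules
      return `unsupported version GS${version}`;
  }
}

console.log(describeStreamCookie('GS2.1.s1746825440$o14')); // → prefixed ($-separated) format
console.log(describeStreamCookie('GS3.1.example')); // → unsupported version GS3
```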

4. Data Optimization

The dollar sign separator and prefix system can be more efficient:

- Empty values can be omitted entirely (no need for consecutive dots)

- Parameters can appear in any order

- Optional parameters don’t require placeholder positions
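
The difference is easiest to see when writing values. The following sketch uses two hypothetical helpers, buildGs2 and buildGs1 (not part of any Google library), and treats the “1” after the version marker as an opaque header copied from the examples in this article.

```javascript
// Hypothetical serializers for illustration only. The "1" header field is
// copied from this article's examples and treated as opaque.

// GS2 style: empty values are skipped entirely; order follows the input object.
function buildGs2(fields, header = '1') {
  const segments = Object.entries(fields)
    .filter(([, v]) => v !== undefined && v !== null && v !== '')
    .map(([prefix, v]) => `${prefix}${v}`);
  return `GS2.${header}.${segments.join('$')}`;
}

// GS1 style: every position must be written, even when it is empty.
function buildGs1(values, header = '1') {
  return `GS1.${header}.${values.map(v => v ?? '').join('.')}`;
}

console.log(buildGs2({ s: 1746825440, o: 14, g: 0, t: 1746825440, j: '', l: '', h: '' }));
// → GS2.1.s1746825440$o14$g0$t1746825440
console.log(buildGs1([1746825440, 14, 0, 1746825440, '', '', '']));
// → GS1.1.1746825440.14.0.1746825440...
```

The GS1 output keeps a run of empty placeholders, while the GS2 output simply stops after the last non-empty value.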

5. Better Error Handling

With prefixed values, parsers can:

- Ignore unknown prefixes without breaking

- Handle missing parameters gracefully

- Validate data more effectively
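
A defensive reader might look like the following minimal sketch. It illustrates the idea and is not Google’s own logic: readGs2Safely is a hypothetical name, and which fields are treated as numeric is an assumption inferred from the example values in this article.

```javascript
// Hypothetical defensive GS2 reader. The NUMERIC set is an assumption based
// on the example values, not a documented rule.
const KNOWN = {
  s: 'sessionId', o: 'sessionNumber', g: 'sessionEngaged',
  t: 'lastHitTimestamp', j: 'joinTimer', l: 'enhancedUserIdLoggedInState',
  h: 'enhancedUserId', d: 'joinId'
};
const NUMERIC = new Set(['s', 'o', 'g', 't', 'j', 'l']);

function readGs2Safely(value) {
  const fields = {};
  const unknown = {};
  const errors = [];
  if (!/^GS2\.\d+\./.test(value)) {
    return { fields, unknown, errors: ['not a GS2 value'] };
  }
  const body = value.split('.').slice(2).join('.');
  for (const segment of body.split('$')) {
    if (segment === '') continue; // tolerate stray or trailing separators
    const prefix = segment[0];
    const raw = segment.slice(1);
    if (!(prefix in KNOWN)) {
      unknown[prefix] = raw; // keep unrecognised data without failing
      continue;
    }
    if (NUMERIC.has(prefix) && !/^\d+$/.test(raw)) {
      errors.push(`${KNOWN[prefix]} is not numeric: "${raw}"`);
    }
    fields[KNOWN[prefix]] = raw;
  }
  return { fields, unknown, errors };
}

const report = readGs2Safely('GS2.1.s1746825440$o14$$z7$tabc');
// report.unknown holds { z: '7' }; report.errors flags the non-numeric "t" value
```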

6. Alignment with Modern Standards

The key-value pair approach (prefix + value) aligns better with:

- JSON-like structures

- URL parameter formats

- Modern data serialization patterns
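
As a small illustration of that analogy, a GS2 body splits directly into key-value pairs, which the standard URLSearchParams and JSON APIs accept as-is. The helper gs2ToPairs is hypothetical.

```javascript
// Hypothetical helper: turn a GS2 value into [prefix, value] pairs.
function gs2ToPairs(value) {
  const body = value.split('.').slice(2).join('.'); // drop the "GS2.<n>." header
  return body
    .split('$')
    .filter(segment => segment !== '')
    .map(segment => [segment[0], segment.slice(1)]);
}

const pairs = gs2ToPairs('GS2.1.s1746825440$o14$g0');
console.log(new URLSearchParams(pairs).toString());
// → s=1746825440&o=14&g=0
console.log(JSON.stringify(Object.fromEntries(pairs)));
// → {"s":"1746825440","o":"14","g":"0"}
```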

7. Alignment with Other Google Cookies

These format updates aren’t isolated to Google Analytics. Google has recently updated several other cookie formats as well, most notably the Google Click ID (gclid) cookie, and these changes follow a similar pattern of modernization.

This evolution reflects Google’s need for a more flexible, maintainable tracking system as Google Analytics continues to evolve and add new features.

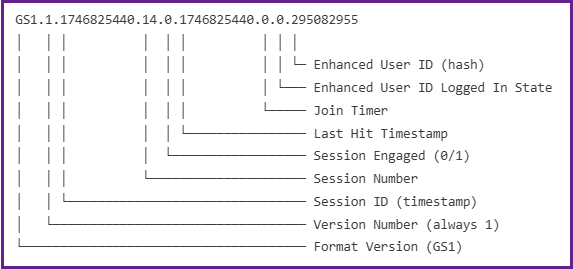

Old Format (GS1)

The original Google Analytics cookie format uses a straightforward dot-separated structure:

GS1.1.1746825440.14.0.1746825440.60.0.295082955

Characteristics:

- Uses dots (.) as separators throughout

- Begins with “GS1” (Google Stream Version 1)

- Values are in fixed positions without prefixes

- Each position has a specific meaning

Structure Breakdown:

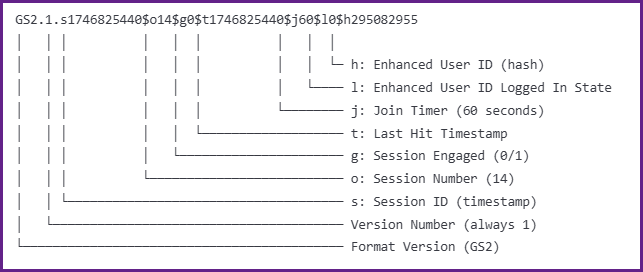
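
The same breakdown can be expressed in code. This minimal sketch uses the GS1 equivalent of the GS2 example shown in the next section (same session, counter, timestamp and hash values) and the field names from the parser at the end of this article. It leaves the header field after “GS1” uninterpreted.

```javascript
// Sketch: reading the GS1 example by position. Field names follow this
// article's parser; the header field after "GS1" is kept as-is.
const gs1 = 'GS1.1.1746825440.14.0.1746825440.60.0.295082955';
const [version, header, ...values] = gs1.split('.');

const POSITIONS = ['sessionId', 'sessionNumber', 'sessionEngaged',
  'lastHitTimestamp', 'joinTimer', 'enhancedUserIdLoggedInState', 'enhancedUserId'];

// Pair each fixed position with its name; missing positions become ''.
const breakdown = Object.fromEntries(
  POSITIONS.map((name, i) => [name, values[i] ?? ''])
);

console.log(version, header); // → GS1 1
console.log(breakdown.lastHitTimestamp, breakdown.joinTimer); // → 1746825440 60
```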

New Format (GS2)

Google Analytics has transitioned to a more flexible cookie format that uses prefixed values:

GS2.1.s1746825440$o14$g0$t1746825440$j60$l0$h295082955

Characteristics:

- Uses dollar signs ($) as separators after the header

- Begins with “GS2” (Google Stream Version 2)

- Each value has a single-letter prefix identifier

- More extensible and self-documenting format

Structure Breakdown:

Prefix Meanings:

- s – Session ID

- o – Session Number

- g – Session Engaged

- t – Last Hit Timestamp

- j – Join Timer

- l – Enhanced User ID Logged In State

- h – Enhanced User ID (hash)

- d – Join ID (not present in this example; appears in some cookies)
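
To see how the prefixes line up with the old positions, here is a hypothetical converter, gs1ToGs2. It assumes the GS1 positions follow the prefix order s, o, g, t, j, l, h, d, which is the same assumption the parser later in this article makes. Empty positions are dropped instead of being written as empty segments.

```javascript
// Hypothetical GS1 → GS2 converter. Assumes GS1 positions map to prefixes
// in the order below (the same order this article's parser uses).
const GS1_ORDER = ['s', 'o', 'g', 't', 'j', 'l', 'h', 'd'];

function gs1ToGs2(value) {
  const [version, header, ...values] = value.split('.');
  if (version !== 'GS1') throw new Error(`expected GS1, got ${version}`);
  const segments = GS1_ORDER
    .map((prefix, i) => [prefix, values[i] ?? ''])
    .filter(([, v]) => v !== '')          // omit empty positions entirely
    .map(([prefix, v]) => prefix + v);    // e.g. ["o", "14"] → "o14"
  return `GS2.${header}.${segments.join('$')}`;
}

console.log(gs1ToGs2('GS1.1.1746825440.14.0.1746825440.60.0.295082955'));
// → GS2.1.s1746825440$o14$g0$t1746825440$j60$l0$h295082955
```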

Complete Parser Implementation

I’ve created a universal parser that handles both Google Analytics cookie formats. This parser is based on my previous work that originally handled only the GS1 format, which I’ve now adapted to automatically detect and parse both GS1 and GS2 versions.

The parser provides:

- Automatic format detection (GS1 vs GS2)

- Consistent output structure regardless of input format

- URL encoding support for dollar signs (%24)

- Fallback handling for missing values
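
In practice the raw value usually arrives through a Cookie header or document.cookie, where “$” may be percent-encoded as “%24”. The sketch below is a hypothetical helper, extractStreamCookies. It assumes stream cookie names start with “_ga_”; adjust the check if your cookies are named differently. The cookie values in the usage line are made up.

```javascript
// Hypothetical helper: pull stream cookies out of a raw Cookie header and
// undo percent-encoding so "%24" becomes "$" before parsing.
// Assumes stream cookies are named "_ga_<id>".
function extractStreamCookies(cookieHeader) {
  const found = {};
  for (const pair of cookieHeader.split(';')) {
    const eq = pair.indexOf('=');
    if (eq === -1) continue;
    const name = pair.slice(0, eq).trim();
    if (!name.startsWith('_ga_')) continue; // also skips the plain "_ga" cookie
    const raw = pair.slice(eq + 1).trim();
    try {
      found[name] = decodeURIComponent(raw);
    } catch {
      found[name] = raw; // malformed escape sequence: keep the raw value
    }
  }
  return found;
}

const cookieHeaderExample = '_ga=GA1.1.123.456; _ga_ABC123=GS2.1.s1746825440%24o14%24g0';
console.log(extractStreamCookies(cookieHeaderExample));
// → { _ga_ABC123: 'GS2.1.s1746825440$o14$g0' }
```

In a browser, document.cookie can be passed in place of the header string.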

I’m providing both ES6 and ES5 versions to ensure compatibility across different JavaScript environments:

ES6 Version (Modern JavaScript)

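    const parseGoogleStreamCookie = str => {
      // Descriptive names for each single-letter prefix
      const mapping = {
        s: "sessionId",
        o: "sessionNumber",
        g: "sessionEngaged",
        t: "lastHitTimestamp",
        j: "joinTimer",
        l: "enhancedUserIdLoggedInState",
        h: "enhancedUserId",
        d: "joinId"
      };
      // GS1 stores its values in this fixed positional order
      const keys = ['s', 'o', 'g', 't', 'j', 'l', 'h', 'd'];
      // Skip the version marker and the header field that follows it
      const [version, , ...values] = String(str || '').split('.');

      if (version === 'GS1') {
        return Object.fromEntries( // Object.fromEntries needs ES2019+
          keys.map((k, i) => [mapping[k], values[i] || ''])
        );
      }

      if (version !== 'GS2') return null; // not a GS1/GS2 stream cookie

      // Pre-fill every known field so GS2 output has the same shape as GS1
      const result = Object.fromEntries(keys.map(k => [mapping[k], '']));
      values.join('.')            // rejoin in case a value contained a dot
        .replace(/%24/g, '$')     // accept URL-encoded dollar signs
        .split('$')
        .filter(s => s !== '')    // ignore empty segments, e.g. a trailing '$'
        .forEach(s => {
          // Unknown prefixes are kept under their raw letter
          result[mapping[s[0]] || s[0]] = decodeURIComponent(s.slice(1));
        });
      return result;
    };

ES5 Version (Legacy Browser/GTM Support)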

    function parseGoogleStreamCookie(str) {
      // Descriptive names for each single-letter prefix
      var mapping = {
        s: "sessionId",
        o: "sessionNumber",
        g: "sessionEngaged",
        t: "lastHitTimestamp",
        j: "joinTimer",
        l: "enhancedUserIdLoggedInState",
        h: "enhancedUserId",
        d: "joinId"
      };
      // GS1 stores its values in this fixed positional order
      var keys = ['s', 'o', 'g', 't', 'j', 'l', 'h', 'd'];
      var parts = String(str || '').split('.');
      var version = parts[0];
      var values = parts.slice(2); // skip the version marker and header field
      var result = {};
      var i, segments, prefix;

      if (version !== 'GS1' && version !== 'GS2') {
        return null; // not a GS1/GS2 stream cookie
      }

      // GS1: assign by position; GS2: pre-fill so both formats share one shape
      for (i = 0; i < keys.length; i++) {
        result[mapping[keys[i]]] = version === 'GS1' ? (values[i] || '') : '';
      }

      if (version === 'GS2') {
        // Rejoin the body (in case a value contained a dot), accept
        // URL-encoded dollar signs, then read each prefixed segment
        segments = values.join('.').replace(/%24/g, '$').split('$');
        for (i = 0; i < segments.length; i++) {
          if (segments[i] === '') continue; // ignore empty segments
          prefix = segments[i].charAt(0);
          // Unknown prefixes are kept under their raw letter
          result[mapping[prefix] || prefix] = decodeURIComponent(segments[i].slice(1));
        }
      }

      return result;
    }

Feel free to use either version based on your project’s requirements.

The parser will return a consistent object structure with descriptive property names, making it easy to work with Google Analytics cookie data in your applications.
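
Because every field comes back as a string, you may want a typed view for reporting. The helper below, normalizeStreamCookie, is a hypothetical post-processing step for the parser’s output. It assumes sessionId and lastHitTimestamp are Unix timestamps in seconds, which is consistent with the example values. It also assumes “1” means true for the engaged and logged-in flags.

```javascript
// Hypothetical post-processing of the parser's string output.
// Assumptions: sessionId and lastHitTimestamp are Unix seconds;
// "1" means true for sessionEngaged and enhancedUserIdLoggedInState.
function normalizeStreamCookie(parsed) {
  const toInt = v => (v === undefined || v === '' ? null : Number.parseInt(v, 10));
  const toDate = v => {
    const seconds = toInt(v);
    return seconds === null || Number.isNaN(seconds) ? null : new Date(seconds * 1000);
  };
  return {
    sessionStart: toDate(parsed.sessionId),
    sessionNumber: toInt(parsed.sessionNumber),
    sessionEngaged: parsed.sessionEngaged === '1',
    lastHit: toDate(parsed.lastHitTimestamp),
    joinTimer: toInt(parsed.joinTimer),
    loggedIn: parsed.enhancedUserIdLoggedInState === '1',
    enhancedUserId: parsed.enhancedUserId || null
  };
}

const typed = normalizeStreamCookie({
  sessionId: '1746825440', sessionNumber: '14', sessionEngaged: '0',
  lastHitTimestamp: '1746825440', joinTimer: '60',
  enhancedUserIdLoggedInState: '0', enhancedUserId: '295082955'
});
console.log(typed.lastHit.toISOString()); // → 2025-05-09T21:17:20.000Z
```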